Description:

Toxic Comments Severity is a deep learning project that rates the severity of toxic comments in English using Natural Language Processing (NLP). Using pretrained language models such as DeBERTa, it classifies comments by toxicity level, from mild to severe. Inspired by the Kaggle competition ‘Jigsaw Rate Severity of Toxic Comments’, the project combines multiple datasets to train models that identify several types of offensive language, including threats, insults, and identity hate.
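As an illustration of how binary labels from several datasets might be brought onto a single severity scale before training, here is a minimal sketch. The label names follow the offence types mentioned above. The weights, cut-offs, the extra "non-toxic" class, and the function names are hypothetical and not taken from the project.

```python
from typing import Dict

# Hypothetical per-label weights: harsher categories count more.
# These values are illustrative assumptions, not the project's settings.
LABEL_WEIGHTS: Dict[str, float] = {
    "insult": 1.0,
    "identity_hate": 2.0,
    "threat": 3.0,
}


def severity_score(labels: Dict[str, int]) -> float:
    """Collapse binary labels (0 or 1) into one score in [0, 1]."""
    total = sum(LABEL_WEIGHTS.values())
    raw = sum(w * labels.get(name, 0) for name, w in LABEL_WEIGHTS.items())
    return raw / total


def severity_bucket(score: float) -> str:
    """Map a score to a coarse class; the cut-offs are hypothetical."""
    if score == 0.0:
        return "non-toxic"
    if score < 0.34:
        return "mild"
    if score < 0.67:
        return "moderate"
    return "severe"


if __name__ == "__main__":
    example = {"insult": 1, "threat": 1}
    score = severity_score(example)
    print(f"{score:.2f} {severity_bucket(score)}")
```

A score of this kind could serve as a regression or ranking target for a model, while the buckets give the coarse classes. Whether the project derives its targets this way is not stated here.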

The project provides the trained models together with a complete deployment pipeline built on Docker and AWS EC2, so the service can be reproduced and run in the cloud. CI/CD is automated with GitHub Actions, which streamlines the development and deployment workflows.

Key Features:

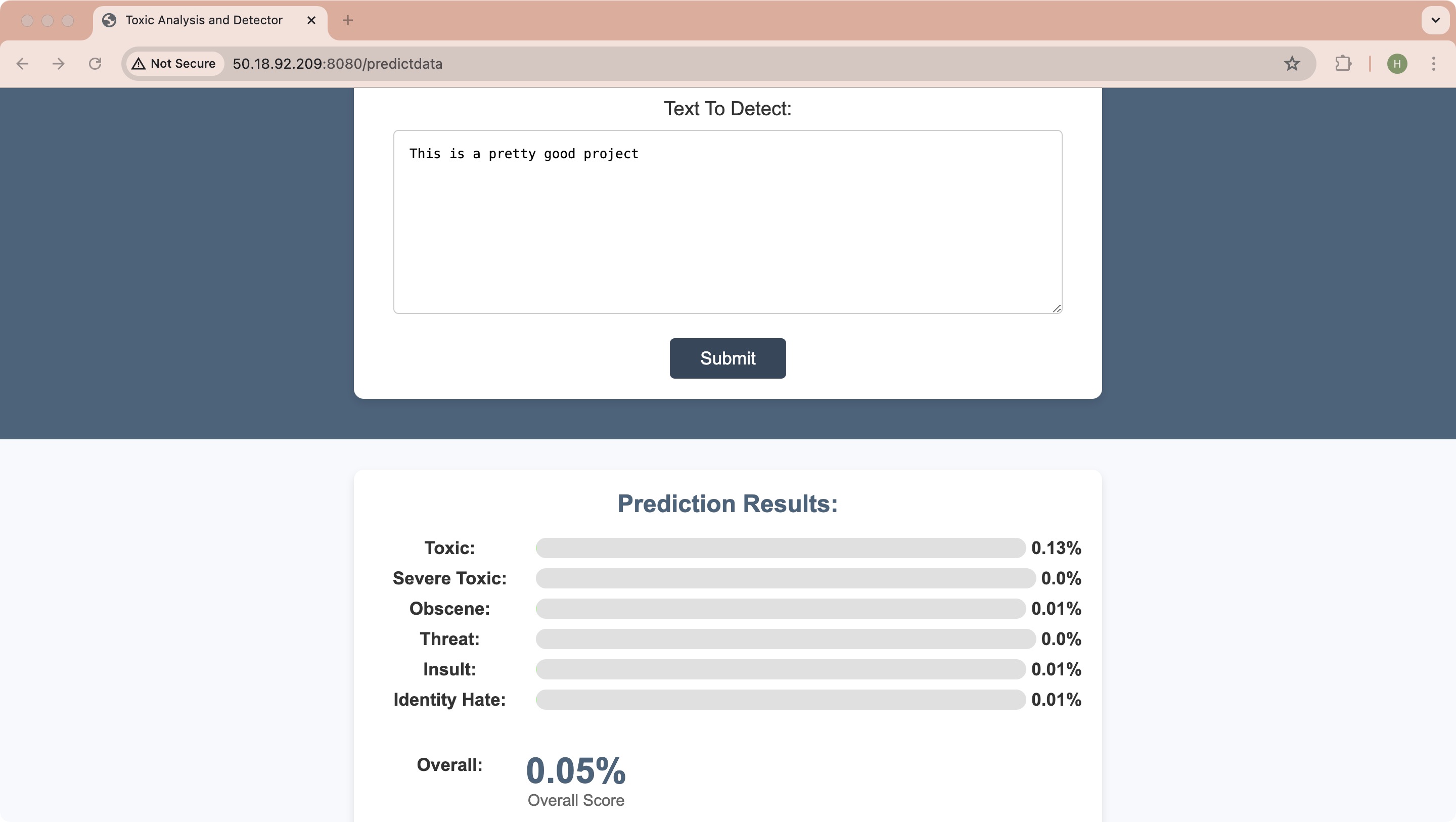

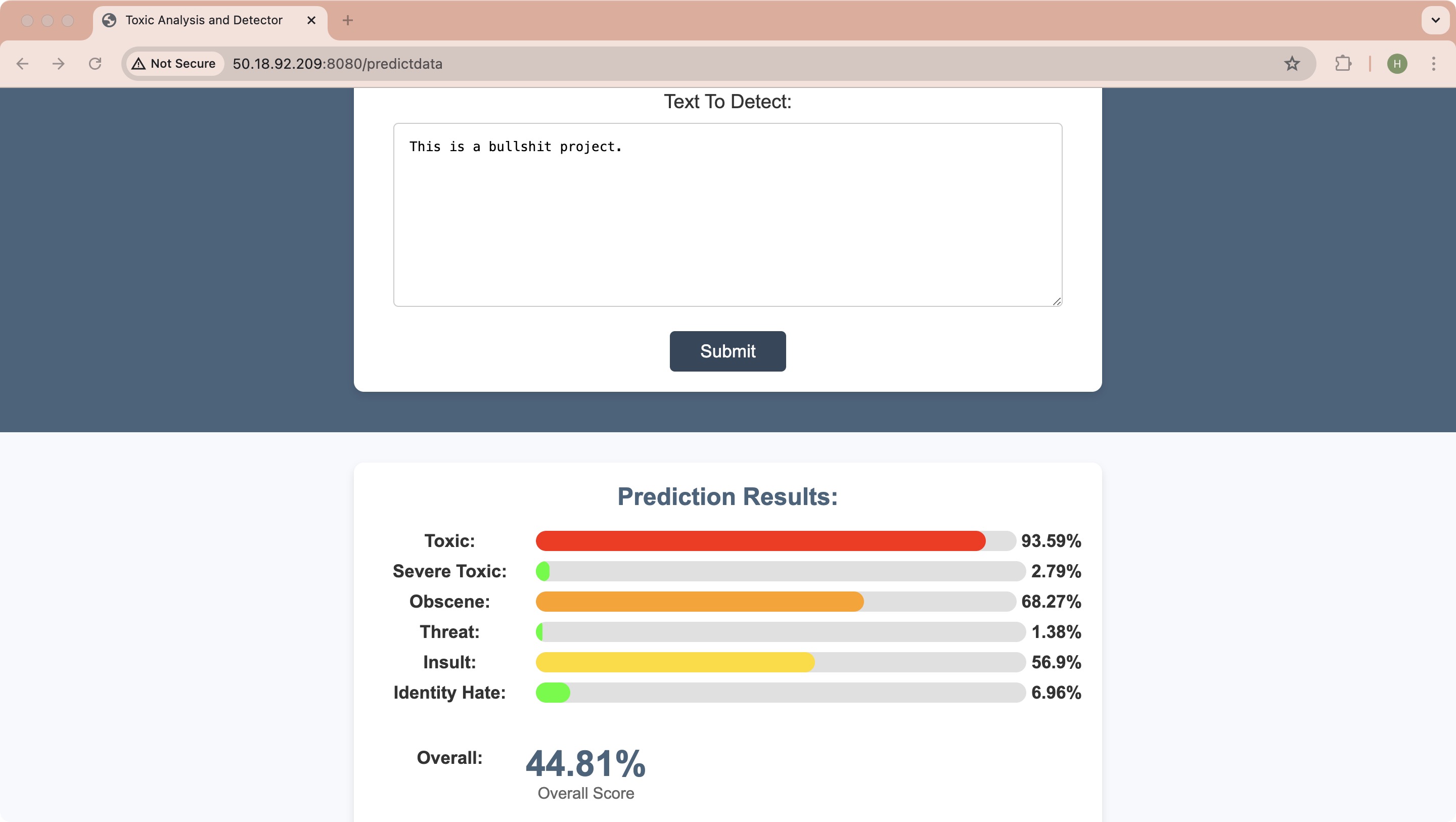

Multi-class classification of toxic comments

Use of modern NLP models, specifically DeBERTa

Integration of multiple datasets for comprehensive training

Dockerized deployment for consistency and portability

Automated CI/CD pipeline with GitHub Actions

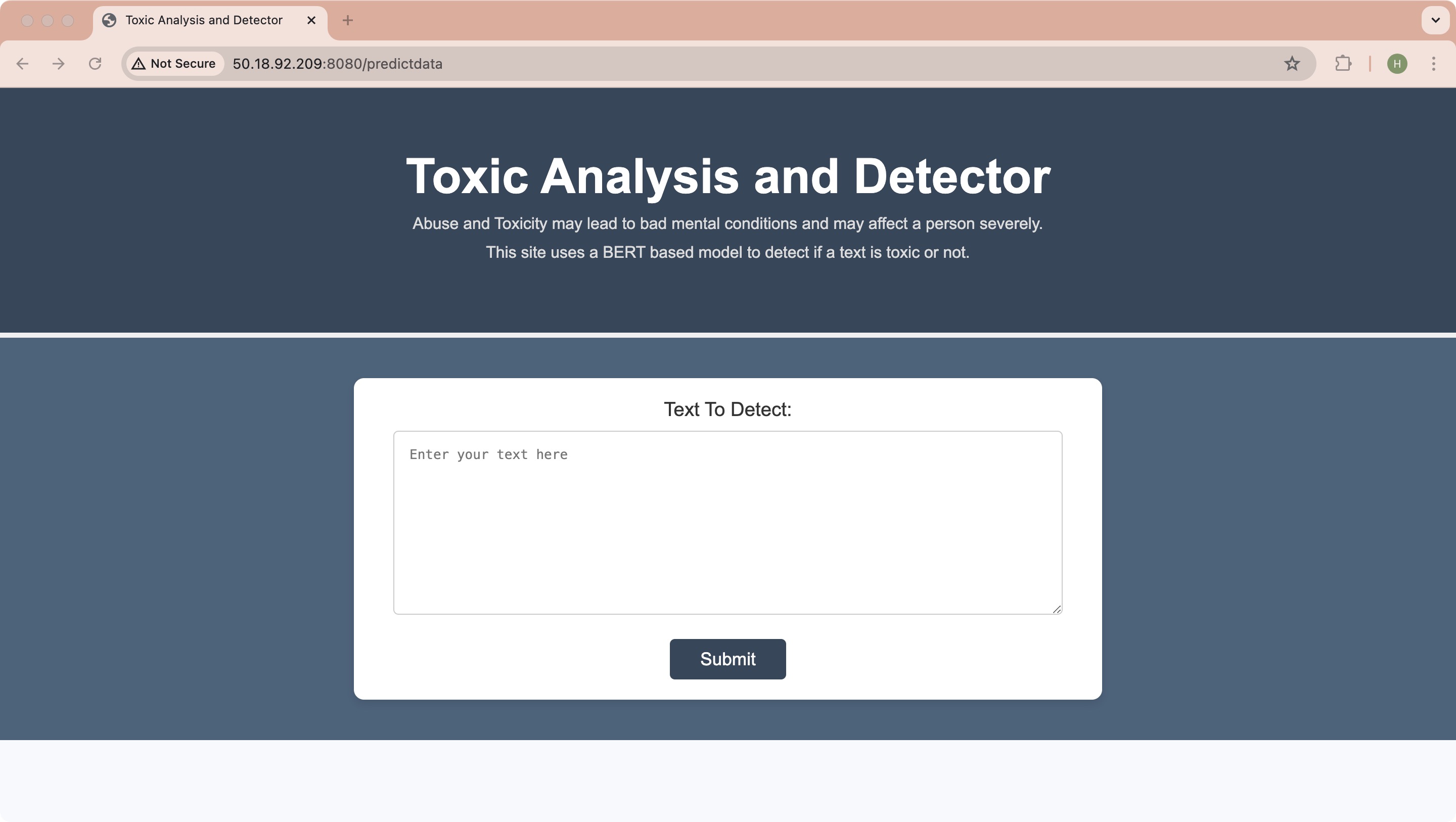

Web interface for easy interaction with the model

Visit GitHub for more information.